Artificial Intelligence

The risk of AI catastrophe is a contentious point of debate within the overall space of AI issues. There is a need for analysis that clarifies the risk and for solutions that address it alongside other AI issues.

In 2017, GCRI published a paper, Reconciliation between factions focused on near-term and long-term artificial intelligence, which outlined our perspective on solutions and strategy for AI. It addressed the divide between people concerned about the issues that current AI technology was already posing and people concerned about the issues that future AI technology might eventually pose, including future catastrophic risks. The main idea of the paper is to focus on win-win solutions that make progress on both near-term and long-term issues. Since then, much of GCRI’s AI work has helped to develop such win-win solutions, on matters such as collective action, corporate governance, and AI ethics.

In 2024, the time of this writing, we believe this win-win perspective has aged well. We are heartened to see AI governance initiatives covering a multitude of near-term and long-term AI issues. At the same time, we are saddened to see the perpetuation of the same old near-term vs. long-term debates. We continue to believe that it would be more constructive to focus efforts on win-win solutions, or even on single-win solutions, instead of endless debate over which AI issues are more important. People involved in addressing AI issues don’t have to agree on everything in order to work together for positive change.

Efforts to address the catastrophic risks from AI benefit from being grounded in the actual nature of the risks. Unfortunately, this is easier said than done. All global catastrophic risk analysis faces a fundamental shortage of data—no civilization-ending global catastrophe has ever happened before. Uncertainty about long-term AI risk is compounded by the fact that it involves technology that doesn’t exist yet. To study the risk, some speculation is inevitable and should not be frowned upon. Nonetheless, AI risk and decision analysis can help by pulling together what is known, identifying important uncertainties, and evaluating the implications for decision-making.

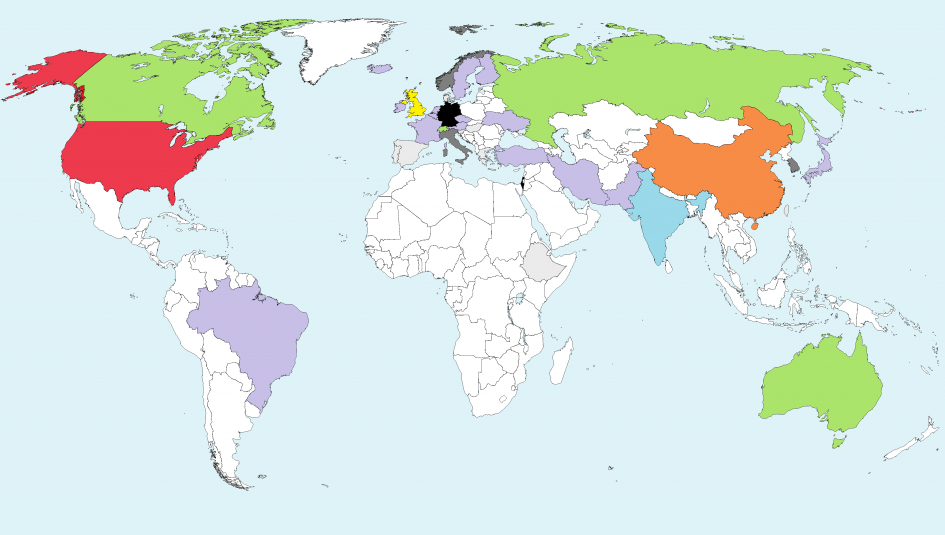

GCRI’s work on AI risk has proceeded along two fronts. First, we have modeled the risk using established risk analysis techniques. Our ASI-PATH model provided a framework for synthesizing and understanding certain concepts of AI risk. The model does not eliminate the uncertainty about AI risk, but it can help to make sense of it, including expert disagreements on it. Second, we have characterized the current state of affairs in AI R&D, with a specific focus on artificial general intelligence (AGI), a potential future type of AI that is closely associated with catastrophic risk. We published reports in 2017 and 2020 that surveyed the landscape of AGI research and development around the world, such as in the map below. These surveys provide a more empirical grounding to work on AI risk.

GCRI’s AI work has also addressed issues in AI ethics. Our work has focused on machine ethics: the ethical concepts to build into AI systems. These concepts define what goals the AI systems will pursue and likewise can be a major factor in the impacts that AI systems have on the world. This holds for all types of AI system, especially for the sorts of high-stakes future systems that may pose a catastrophic risk. As humans build more and more capable AI systems, it becomes important to ask what exactly we want these systems to do.

GCRI’s AI ethics work has critiqued standard AI ethics paradigms such as value alignment and human compatibility. These paradigms often treat machine ethics in monolithic terms, such as “the” value alignment problem, as if there were only one. Our work on social choice ethics shows that, in fact, there are many values that AI systems can be aligned to or made compatible with. One reason is that human values are diverse and people disagree about them. Indeed, the concept of social choice exists to handle such disagreements, such as through voting in elections. Another reason is that there are many beings whose values may merit inclusion in machine ethics. Whereas discussions of machine ethics often focus narrowly on the values of current humans, an ethical argument can be made for also including future humans and various nonhumans. In high-stakes AI systems, whether these beings are included could be the difference between very good and catastrophically bad outcomes for them.

Featured GCRI Publications on Artificial Intelligence

2020 survey of artificial general intelligence projects for ethics, risk, and policy

An AI system with intelligence across a wide range of domains could be extremely impactful—for better or for worse. Traditionally, AI systems have been narrow, focusing on a limited set of domains. This paper surveys the projects seeking to build artificial general intelligence (AGI). It finds 72 projects across 37 countries, mainly in corporations and academia. The projects vary in terms of their stated goals, commitment to safety, and other parameters of relevance to AI governance.

Moral consideration of nonhumans in the ethics of artificial intelligence

Work on AI ethics often seeks to align AI to human values. Arguably, the values of nonhumans should also be included: to exclude nonhumans would be an inappropriate privileging of humans over other entities. If they are excluded, it could result in potentially catastrophic harms to nonhuman populations and other unethical outcomes. This paper documents the limited amount of attention to nonhumans within the field of AI ethics and presents an argument for giving nonhumans adequate attention.

Collective action on artificial intelligence: A primer and review

To achieve positive outcomes for AI and avoid harms, including catastrophic harms, many people will need to take a variety of actions. In other words, collective action is needed. Fortunately, human society has considerable experience with collective action in other domains, and this has been studied extensively in prior research. This paper provides a two-way primer, helping people with collective action expertise learn more about AI and helping people with AI expertise learn more about collective action.

Full List of GCRI Publications on Artificial Intelligence

Baum, Seth D. Assessing the risk of takeover catastrophe from large language models. Risk Analysis, forthcoming, DOI 10.1111/risa.14353.

Baum, Seth D. and Andrea Owe. On the intrinsic value of diversity. Inquiry, forthcoming, DOI 10.1080/0020174X.2024.2367247.

Baum, Seth D. Manipulating aggregate societal values to bias AI social choice ethics. AI and Ethics, forthcoming, DOI 10.1007/s43681-024-00495-6.

Baum, Seth D. and Andrea Owe, 2023. From AI for people to AI for the world and the universe. AI & Society, vol. 38, no. 2 (April), pages 679-680, DOI 10.1007/s00146-022-01402-5.

Owe, Andrea and Seth D. Baum, 2021. The ethics of sustainability for artificial intelligence. In Philipp Wicke, Marta Ziosi, João Miguel Cunha, and Angelo Trotta (editors), Proceedings of the 1st International Conference on AI for People: Towards Sustainable AI (CAIP 2021), Bologna, pages 1-17, DOI 10.4108/eai.20-11-2021.2314105.

Baum, Seth D. and Andrea Owe, 2023. Artificial intelligence needs environmental ethics. Ethics, Policy, & Environment, vol. 26, no. 1, pages 139-143, DOI 10.1080/21550085.2022.2076538.

Baum, Seth D. and Jonas Schuett, 2021. The case for long-term corporate governance of AI. Effective Altruism Forum, 3 November.

Galaz, Victor, Miguel A. Centeno, Peter W. Callahan, Amar Causevic, Thayer Patterson, Irina Brass, Seth Baum, Darryl Farber, Joern Fischer, David Garcia, Timon McPhearson, Daniel Jimenez, Brian King, Paul Larcey, and Karen Levy, 2021. Artificial intelligence, systemic risks, and sustainability. Technology in Society, vol. 67 (November), article 101741, DOI 10.1016/j.techsoc.2021.101741.

Cihon, Peter, Jonas Schuett, and Seth D. Baum, 2021. Corporate governance of artificial intelligence in the public interest. Information, vol. 12, article 275, DOI 10.3390/info12070275.

de Neufville, Robert and Seth D. Baum, 2021. Collective action on artificial intelligence: A primer and review. Technology in Society, vol. 66 (August), article 101649, DOI 10.1016/j.techsoc.2021.101649.

Owe, Andrea and Seth D. Baum, 2021. Moral consideration of nonhumans in the ethics of artificial intelligence. AI and Ethics, vol. 1, no. 4 (November), pages 517-528, DOI 10.1007/s43681-021-00065-0.

Cihon, Peter, Moritz J. Kleinaltenkamp, Jonas Schuett, and Seth D. Baum, 2021. AI certification: Advancing ethical practice by reducing information asymmetries. IEEE Transactions on Technology and Society, vol. 2, issue 4 (December), pages 200-209, DOI 10.1109/TTS.2021.3077595.

Fitzgerald, McKenna, Aaron Boddy, and Seth D. Baum, 2020. 2020 survey of artificial general intelligence projects for ethics, risk, and policy. Global Catastrophic Risk Institute Technical Report 20-1.

Baum, Seth D., 2021. Artificial interdisciplinarity: Artificial intelligence for research on complex societal problems. Philosophy & Technology, vol. 34, no. S1 (November), pages 45-63, DOI 10.1007/s13347-020-00416-5.

Baum, Seth D., 2020. Deep learning and the sociology of human-level artificial intelligence – Book review: Artifictional Intelligence: Against Humanity’s Surrender to Computers. Metascience, vol. 29, no. 2 (July), pages 313-317, DOI 10.1007/s11016-020-00510-6.

Baum, Seth D., 2020. Medium-term artificial intelligence and society. Information, vol. 11, no. 6, article 290, DOI 10.3390/info11060290.

Baum, Seth D., Robert de Neufville, Anthony M. Barrett, and Gary Ackerman, 2022. Lessons for artificial intelligence from other global risks. In Maurizio Tinnirello (editor), The Global Politics of Artificial Intelligence. Boca Raton: CRC Press, pages 103-131.

Baum, Seth D., 2018. Countering superintelligence misinformation. Information, vol. 9, no. 10 (September), article 244, DOI 10.3390/info9100244.

Baum, Seth D., 2018. Superintelligence skepticism as a political tool. Information, vol. 9, no. 9 (August), article 209, DOI 10.3390/info9090209.

Baum, Seth D., 2018. Preventing an AI apocalypse. Project Syndicate, 16 May.

Baum, Seth D., Anthony M. Barrett, and Roman V. Yampolskiy, 2017. Modeling and interpreting expert disagreement about artificial superintelligence. Informatica, vol. 41, no. 4 (December), pages 419-427.

Baum, Seth D., 2017. A survey of artificial general intelligence projects for ethics, risk, and policy. Global Catastrophic Risk Institute Working Paper 17-1.

Baum, Seth D., 2017. On the promotion of safe and socially beneficial artificial intelligence. AI & Society, vol. 32, no. 4 (November), pages 543-551, DOI 10.1007/s00146-016-0677-0.

White, Trevor N. and Seth D. Baum, 2017. Liability law for present and future robotics technology. In Patrick Lin, Keith Abney, and Ryan Jenkins (editors), Robot Ethics 2.0. Oxford: Oxford University Press, pages 66-79.

Baum, Seth D., 2020. Social choice ethics in artificial intelligence. AI & Society, vol. 35, no. 1 (March), pages 165-176, DOI 10.1007/s00146-017-0760-1.

Baum, Seth D., 2017. The social science of computerized brains – Book review: The Age of Em: Work, Love, and Life When Robots Rule the Earth. Futures, vol. 90 (June), pages 61-63, DOI 10.1016/j.futures.2017.03.005.

Baum, Seth D., 2018. Reconciliation between factions focused on near-term and long-term artificial intelligence. AI & Society, vol. 33, no. 4 (November), pages 565-572, DOI 10.1007/s00146-017-0734-3.

Baum, Seth, 2016. Should we let uploaded brains take over the world? Scientific American Blogs, 18 October.

Baum, Seth, 2016. Tackling near and far AI threats at once. Bulletin of the Atomic Scientists, 6 October.

Barrett, Anthony M. and Seth D. Baum, 2017. A model of pathways to artificial superintelligence catastrophe for risk and decision analysis. Journal of Experimental & Theoretical Artificial Intelligence, vol. 29, no. 2, pages 397-414, DOI 10.1080/0952813X.2016.1186228.

Barrett, Anthony M. and Seth D. Baum, 2017. Risk analysis and risk management for the artificial superintelligence research and development process. In Victor Callaghan, James Miller, Roman Yampolskiy, and Stuart Armstrong (editors), The Technological Singularity: Managing the Journey. Berlin: Springer, pages 127-140.

Baum, Seth and Trevor White, 2015. When robots kill. The Guardian Political Science blog, 23 June.

Baum, Seth, 2015. Stopping killer robots and other future threats. Bulletin of the Atomic Scientists, 22 February.

Baum, Seth D., 2014. Film review: Transcendence. Journal of Evolution and Technology, vol. 24, no. 2 (September), pages 79-84.

Baum, Seth, 2013. Our Final Invention: Is AI the defining issue for humanity? Scientific American Blogs, 11 October.

Baum, Seth and Grant Wilson, 2013. How to create an international treaty for emerging technologies. Institute for Ethics and Emerging Technologies, 21 February.

Wilson, Grant S., 2013. Minimizing global catastrophic and existential risks from emerging technologies through international law. Virginia Environmental Law Journal, vol. 31, no. 2, pages 307-364.

Image credits: landscape: Buckyball Design; map: Seth Baum; voting line: April Sikorski