Risk & Decision Analysis

For decades, experts have developed robust tools to analyze risks and make sound decisions in the face of uncertainty. However, the uncertainty about global catastrophic risk is unusually large. Risk and decision analysis tools can be applied to global catastrophic risk, but this must be done carefully.

An Introduction to Risk & Decision Analysis

In March 2022, toward the beginning of the Russian invasion of Ukraine, NATO leadership declined Ukraine’s request to implement a no-fly zone to counter Russia’s aerial attacks. NATO leadership cited the risk of a no-fly zone escalating into a larger war between NATO and Russian forces. Similar debates over how to support Ukraine have played out throughout the conflict, with the possibility of escalation all the way to nuclear war constantly lurking and often factoring into the discussion. Like many policy decisions, this one is genuinely difficult: it involves a tradeoff between protecting Ukrainian citizens facing an acute and urgent threat and the possibility of escalation to a war that could harm a much larger number of people.

This is exactly the type of situation for which risk and decision analysis can be valuable. The decision-makers, in this case NATO leadership, would benefit from an understanding of what their decision options are and the risks and other factors attached to each of the options, so that they can use their judgment to make a sound decision. When important factors are overlooked or misunderstood, the result can be bad decision-making, with potentially catastrophic consequences. Whatever the merits of the no-fly zone may have been (and we at GCRI tend to agree with NATO on this matter), it would be tragic for the world to end up in nuclear war because some government leaders neglected to consider escalation risks.

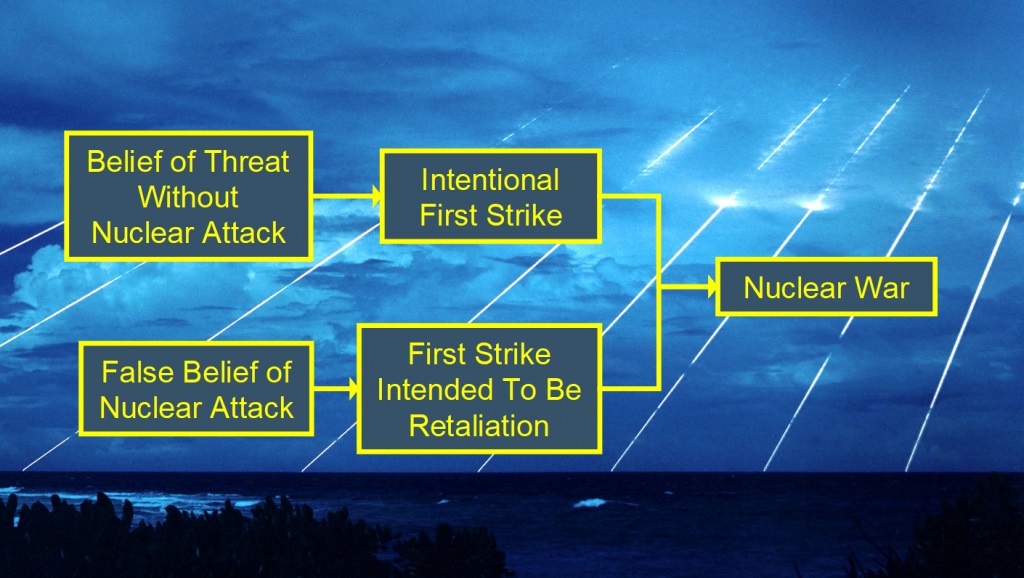

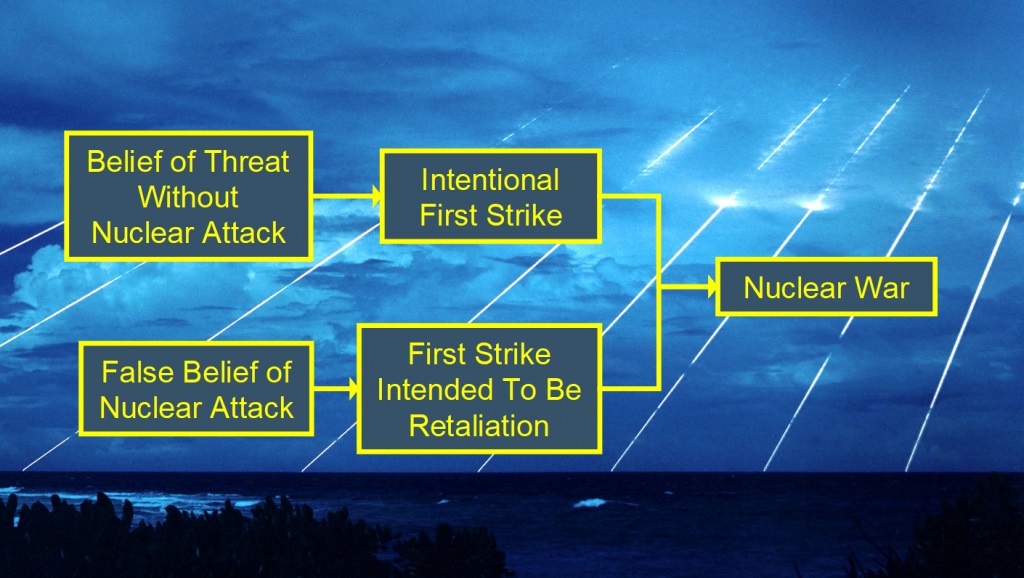

The no-fly zone decision is also a good example of the difficulty of analyzing global catastrophic risks and related issues. While the threat to Ukrainian citizens was quite clear, the risk of escalation was far more ambiguous. How would both sides react if, for example, a NATO plane shot down a Russian plane for violating the no-fly zone? Would Russia retaliate? Would NATO retaliate in turn? What form would the retaliation(s) take? When would they stop? Risk analysis might aspire to assign probabilities to the various possible outcomes, along with the severity of harm each outcome would cause, but in this case there are no clear numbers for either the probabilities or the severities. The war was (and, at the time of this writing in 2024, still is) a largely unprecedented event, and escalation would depend on how particular human beings respond to particular events.
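To make that structure concrete, the escalation questions can be arranged as an event tree, in which each branch carries a probability and each end point carries a severity. The Python sketch below assumes such a tree. The `Node` class, the branch labels, and every probability and severity in `example_tree()` are hypothetical placeholders chosen only so the arithmetic is easy to follow; they are not estimates of real escalation risk, and supplying defensible values is exactly the hard part described above.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Node:
    """One point in a hypothetical escalation event tree."""
    label: str
    severity: float = 0.0  # harm if the path ends here (arbitrary units)
    branches: List[Tuple[float, "Node"]] = field(default_factory=list)


def outcomes(node: Node, prob: float = 1.0,
             path: Tuple[str, ...] = ()) -> Iterator[Tuple[Tuple[str, ...], float, float]]:
    """Yield (path, probability, severity) for every terminal outcome."""
    path = path + (node.label,)
    if not node.branches:
        yield path, prob, node.severity
        return
    total = sum(p for p, _ in node.branches)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"branch probabilities at {node.label!r} sum to {total}")
    for p, child in node.branches:
        # Path probability is the product of branch probabilities along the way.
        yield from outcomes(child, prob * p, path)


def expected_severity(root: Node) -> float:
    """Probability-weighted severity over all terminal outcomes."""
    return sum(p * s for _, p, s in outcomes(root))


def example_tree() -> Node:
    # Hypothetical placeholder numbers, for illustration only.
    nato_retaliates = Node("NATO retaliates", branches=[
        (0.9, Node("limited war", severity=100)),
        (0.1, Node("nuclear war", severity=10_000)),
    ])
    russia_retaliates = Node("Russia retaliates", branches=[
        (0.7, Node("NATO holds back", severity=5)),
        (0.3, nato_retaliates),
    ])
    return Node("NATO shoots down Russian plane", branches=[
        (0.5, Node("no retaliation", severity=1)),
        (0.5, russia_retaliates),
    ])


if __name__ == "__main__":
    tree = example_tree()
    for path, p, s in outcomes(tree):
        print(" -> ".join(path), f"p={p:.3f}", f"severity={s}")
    print("expected severity:", expected_severity(tree))
```

The value of a sketch like this lies mainly in its structure: it forces each escalation step to be named explicitly. The expected-severity number it prints is only as meaningful as the placeholder inputs, so it should not be read as a risk estimate.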

Indeed, as GCRI saw firsthand in our own media engagement on the war in Ukraine, opinions on the size of the risk varied widely. For our part, we declined to comment on the size of the risk because of its highly uncertain character, the fast-changing conditions on the ground (and in the skies), and the tendency for people to latch onto (or “anchor” on) specific risk estimates and misinterpret them as more certain than they actually are. We declined despite significant interest in our opinion on the risk and despite our significant expertise on the risk of nuclear war, such as from our nuclear war probability model sketched below.

Over the years, GCRI has received two opposing criticisms of our risk and decision analysis: some people say it is too quantitative, and others say it is not quantitative enough. A more quantitative approach would follow from a strict interpretation of subjective or “Bayesian” probability theory, in which all parameters are inherently quantifiable, even if only as the analyst’s subjective best guess. Furthermore, as the argument goes, some quantification is always better than none, even if it is just a crude guess, because the numbers can then be used to weigh the various factors in a decision calculus. In contrast, a less quantitative approach would acknowledge that there is no rigorous analytical basis for assigning numbers to certain parameters, because the requisite data or other information just isn’t there. Instead, as the argument goes, decision-making should embrace the inherently uncertain nature of the situation and seek correspondingly appropriate courses of action.
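The strictly quantitative position can be illustrated with a simple expected-loss calculation: plug a subjective best-guess probability into each option's expected harm, then pick the option with the lowest value. In the Python sketch below, the option names, the two-part harm model (direct harm plus probability-weighted escalation harm), and all numbers are hypothetical, chosen only to show the mechanics of such a decision calculus.

```python
from typing import Dict

# Hypothetical options: direct harm, plus harm that occurs only if escalation happens.
OPTIONS: Dict[str, Dict[str, float]] = {
    "intervene": {"direct_harm": 10.0, "escalation_harm": 1000.0},
    "refrain": {"direct_harm": 50.0, "escalation_harm": 0.0},
}


def expected_loss(option: Dict[str, float], p_escalation: float) -> float:
    """Direct harm plus escalation harm weighted by its subjective probability."""
    if not 0.0 <= p_escalation <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return option["direct_harm"] + p_escalation * option["escalation_harm"]


def best_option(options: Dict[str, Dict[str, float]], p_escalation: float) -> str:
    """Name of the option with the lowest expected loss at the given guess."""
    return min(options, key=lambda name: expected_loss(options[name], p_escalation))


if __name__ == "__main__":
    for guess in (0.01, 0.05):
        print(guess, best_option(OPTIONS, guess))
```

With these placeholder numbers, a best guess of 0.01 favors `intervene` and a best guess of 0.05 favors `refrain`.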

We see merit in both criticisms, but we respectfully disagree with each. Yes, there are deep uncertainties about many issues, including global catastrophic risks, and these pose challenges for quantification. However, careful risk and decision analysis can still make progress on the risks. And yes, it may be theoretically defensible to quantify all parameters. However, in practice, quantifying deeply uncertain parameters can make decision-making worse, for reasons such as the anchoring and misinterpretation issues noted above. Therefore, our approach is to use structured risk and decision analysis methods to characterize risks and decisions to the extent that the available information reasonably permits, and to proceed cautiously on quantification.
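One generic way to characterize a decision without committing to a single number is a break-even (threshold) analysis: find the probability at which two options are equally harmful, then check whether the plausible range for that probability lies entirely on one side. The Python sketch below assumes a simple harm model in which acting carries direct harm plus a chance of escalation harm, while refraining carries only direct harm. The function names, the model, and the numbers are hypothetical, and this is a standard sensitivity technique offered for illustration, not a description of GCRI's specific methods.

```python
def break_even_probability(direct_act: float, escalation_act: float,
                           direct_refrain: float) -> float:
    """Escalation probability at which acting and refraining have equal expected harm.

    Expected harm of acting:     direct_act + p * escalation_act
    Expected harm of refraining: direct_refrain (assumed to carry no escalation risk)
    """
    if escalation_act <= 0:
        raise ValueError("escalation harm must be positive")
    return (direct_refrain - direct_act) / escalation_act


def preferred_over_range(p_low: float, p_high: float, threshold: float) -> str:
    """Report whether a plausible probability range settles the choice."""
    if p_low > p_high:
        raise ValueError("p_low must not exceed p_high")
    if p_high < threshold:
        return "act"           # every plausible p makes acting less harmful
    if p_low > threshold:
        return "refrain"       # every plausible p makes refraining less harmful
    return "depends on p"      # the range straddles the break-even point


if __name__ == "__main__":
    t = break_even_probability(direct_act=10, escalation_act=1000, direct_refrain=50)
    print("break-even probability:", t)
    print(preferred_over_range(0.001, 0.01, t))
    print(preferred_over_range(0.001, 0.2, t))
```

With these placeholder inputs the break-even probability is 0.04. A plausible range of 0.001 to 0.01 favors acting, while a range of 0.001 to 0.2 straddles the threshold, signaling that the decision hinges on a parameter the evidence cannot pin down. Reporting that result directly avoids presenting a single best guess as more certain than it is.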

Our longstanding participation in the Society for Risk Analysis has taught us an important lesson about the role of risk and decision analysis. Often, the value comes not from the numbers produced by the analysis, such as the probabilities and severities, but instead from the more qualitative insight that emerges from the process of building risk and decision models and using the models as a structure for synthesizing relevant information. These models can help decision-makers wrap their minds around the problems they face, identify important factors and uncertainties, and make informed judgments about what courses of action to take. Our risk and decision analysis proceeds in this spirit, and it likewise supports our own work on solutions and strategy for addressing global catastrophic risk.

For further discussion, please see our article The Role of Risk and Decision Analysis in Global Catastrophic Risk Reduction.

Image credits: no-fly zone protest: Amaury Laporte; probability model background image: United States Army; montage of numbers: Seth Baum

Featured GCRI Publications on Risk & Decision Analysis

A paradox: if the risk to humanity from natural threats is so large, then why haven’t we been wiped out yet? This paper, published in the journal Natural Hazards, argues that, contrary to prior thinking, humanity’s deep history of survival may actually have little bearing on the current risk from natural sources. Instead, each risk needs to be analyzed on its own terms.

Would the world be safer with or without nuclear weapons? This is one of several important policy questions in which risk analysis arguably should play a central role. However, this paper, published in a UCLA workshop proceedings, reflects on the difficulty of analyzing nuclear war risk and using the analysis in policy.

Information can have value by improving the quality of decision-making. This paper, published in the journal Decision Analysis, develops a framework for the valuation of information about global catastrophic risk and illustrates the framework using examples of information about asteroids/comets and nuclear war.
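The textbook form of this idea is the expected value of perfect information (EVPI): the expected payoff if the uncertainty were resolved before deciding, minus the expected payoff of the best decision made now. The Python sketch below shows that generic calculation only; it is not the cost-effectiveness-based framework developed in the paper, and the asteroid states, actions, prior probabilities, and payoffs are hypothetical placeholders.

```python
from typing import Dict

Prior = Dict[str, float]                 # state -> probability
Payoffs = Dict[str, Dict[str, float]]    # action -> state -> payoff (higher is better)


def expected_value(action: str, prior: Prior, payoffs: Payoffs) -> float:
    """Expected payoff of one action under the current (prior) uncertainty."""
    return sum(prob * payoffs[action][state] for state, prob in prior.items())


def evpi(prior: Prior, payoffs: Payoffs) -> float:
    """Expected value of perfect information about which state is true."""
    if abs(sum(prior.values()) - 1.0) > 1e-9:
        raise ValueError("prior probabilities must sum to 1")
    # Best achievable by choosing a single action under current uncertainty.
    value_now = max(expected_value(a, prior, payoffs) for a in payoffs)
    # If the state were revealed first, the best action could be chosen per state.
    value_informed = sum(
        prob * max(payoffs[a][state] for a in payoffs)
        for state, prob in prior.items()
    )
    return value_informed - value_now


if __name__ == "__main__":
    prior = {"impact": 0.1, "miss": 0.9}
    payoffs = {
        "deflect": {"impact": -10.0, "miss": -10.0},     # mission cost either way
        "do nothing": {"impact": -1000.0, "miss": 0.0},  # catastrophe if it hits
    }
    print("EVPI:", evpi(prior, payoffs))
```

With these placeholder numbers, perfect knowledge of whether the object will hit is worth 9 payoff units: it lets the decision-maker skip the deflection mission in the 90% of cases where the object would miss.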

Full List of GCRI Publications on Risk & Decision Analysis

Baum, Seth, 2025. Government procedure and global catastrophic risk. GCRI Commentary 2025-5, 14 October.

Baum, Seth D., 2024. Climate change, uncertainty, and global catastrophic risk. Futures, vol. 162 (September), article 103432, DOI 10.1016/j.futures.2024.103432.

Baum, Seth D., 2025. Assessing the risk of takeover catastrophe from large language models. Risk Analysis, vol. 45, no. 4 (April), pages 752-765, DOI 10.1111/risa.14353.

Ackerman, Gary, Brandon Behlendorf, Seth Baum, Hayley Peterson, Anna Wetzel, and John Halstead, 2024. The origin and implications of the COVID-19 pandemic: An expert survey. Global Catastrophic Risk Institute Technical Report 24-1.

Baum, Seth D., 2023. Assessing natural global catastrophic risks. Natural Hazards, vol. 115, no. 3 (February), pages 2699-2719, DOI 10.1007/s11069-022-05660-w.

Baum, Seth D., 2022. Book review: The Precipice: Existential Risk and the Future of Humanity. Risk Analysis, vol. 42, no. 9 (September), pages 2122-2124, DOI 10.1111/risa.13954.

Baum, Seth D., 2022. How to evaluate the risk of nuclear war. BBC Future, 10 March.

Baum, Seth D., 2021. Accounting for violent conflict risk in planetary defense decisions. Acta Astronautica, vol. 178 (January), pages 15-23, DOI 10.1016/j.actaastro.2020.08.028.

Baum, Seth D., 2020. Quantifying the probability of existential catastrophe: A reply to Beard et al. Futures, vol. 123 (October), article 102608, DOI 10.1016/j.futures.2020.102608.

Baum, Seth D., 2019. The challenge of analyzing global catastrophic risks. Decision Analysis Today, vol. 38, no. 1 (July), pages 20-24.

Baum, Seth D., 2019. Risk-risk tradeoff analysis of nuclear explosives for asteroid deflection. Risk Analysis, vol. 39, no. 11 (November), pages 2427-2442, DOI 10.1111/risa.13339.

Umbrello, Steven and Seth D. Baum, 2018. Evaluating future nanotechnology: The net societal impacts of atomically precise manufacturing. Futures, vol. 100 (June), pages 63-73, DOI 10.1016/j.futures.2018.04.007.

Baum, Seth D., 2018. Reflections on the risk analysis of nuclear war. In B. John Garrick (editor), Proceedings of the Workshop on Quantifying Global Catastrophic Risks, Garrick Institute for the Risk Sciences, University of California, Los Angeles, pages 19-50.

Baum, Seth, 2018. The role of risk and decision analysis in global catastrophic risk reduction. GCRI Commentary 2018-1, 26 November.

Baum, Seth D., 2018. Uncertain human consequences in asteroid risk analysis and the global catastrophe threshold. Natural Hazards, vol. 94, no. 2 (November), pages 759-775, DOI 10.1007/s11069-018-3419-4.

Baum, Seth D. and Anthony M. Barrett, 2018. A model for the impacts of nuclear war. Global Catastrophic Risk Institute Working Paper 18-2.

Baum, Seth D., Robert de Neufville, and Anthony M. Barrett, 2018. A model for the probability of nuclear war. Global Catastrophic Risk Institute Working Paper 18-1.

Baum, Seth D., Anthony M. Barrett, and Roman V. Yampolskiy, 2017. Modeling and interpreting expert disagreement about artificial superintelligence. Informatica, vol. 41, no. 4 (December), pages 419-427.

Baum, Seth D., 2017. Assessing global catastrophic risk. In Helen Caldicott (editor), Sleepwalking to Armageddon: The Threat of Nuclear Annihilation. New York: New Press, pages 3-10.

Baum, Seth D. and Anthony M. Barrett, 2017. Towards an integrated assessment of global catastrophic risk. In B.J. Garrick (editor), Proceedings of the First Colloquium on Catastrophic and Existential Risk, Garrick Institute for the Risk Sciences, University of California, Los Angeles, pages 41-62.

Barrett, Anthony M., 2017. Value of GCR information: Cost effectiveness-based approach for global catastrophic risk (GCR) reduction. Decision Analysis, vol. 14, no. 3 (September), pages 187-203, DOI 10.1287/deca.2017.0350.

Barrett, Anthony M. and Seth D. Baum, 2017. A model of pathways to artificial superintelligence catastrophe for risk and decision analysis. Journal of Experimental & Theoretical Artificial Intelligence, vol. 29, no. 2, pages 397-414, DOI 10.1080/0952813X.2016.1186228.

Barrett, Anthony, 2016. False alarms, true dangers? Current and future risks of inadvertent U.S.-Russian nuclear war. RAND Corporation, document PE-191-TSF, DOI 10.7249/PE191.

Barrett, Anthony M. and Seth D. Baum, 2017. Risk analysis and risk management for the artificial superintelligence research and development process. In Victor Callaghan, James Miller, Roman Yampolskiy, and Stuart Armstrong (editors), The Technological Singularity: Managing the Journey. Berlin: Springer, pages 127-140.

Baum, Seth, 2015. Breaking down the risk of nuclear deterrence failure. Bulletin of the Atomic Scientists, 27 July.

Baum, Seth, 2015. Should nuclear devices be used to stop asteroids? Bulletin of the Atomic Scientists, 17 June.

Baum, Seth D., 2015. Risk and resilience for unknown, unquantifiable, systemic, and unlikely/catastrophic threats. Environment Systems and Decisions, vol. 35, no. 2 (June), pages 229-236, DOI 10.1007/s10669-015-9551-8.

Baum, Seth, 2015. Is stratospheric geoengineering worth the risk? Bulletin of the Atomic Scientists, 5 June.

Baum, Seth D. and Anthony M. Barrett, 2018. Global catastrophes: The most extreme risks. In Vicki Bier (editor), Risk in Extreme Environments: Preparing, Avoiding, Mitigating, and Managing. New York: Routledge, pages 174-184.

Baum, Seth D., 2014. The great downside dilemma for risky emerging technologies. Physica Scripta, vol. 89, no. 12 (December), article 128004, DOI 10.1088/0031-8949/89/12/128004.

Baum, Seth, 2014. Nuclear war, the black swan we can never see. Bulletin of the Atomic Scientists, 21 November.

Baum, Seth D. and Itsuki C. Handoh, 2014. Integrating the planetary boundaries and global catastrophic risk paradigms. Ecological Economics, vol. 107 (November), pages 13-21, DOI 10.1016/j.ecolecon.2014.07.024.

Haqq-Misra, Jacob, Michael W. Busch, Sanjoy M. Som, and Seth D. Baum, 2013. The benefits and harm of transmitting into space. Space Policy, vol. 29, no. 1 (February), pages 40-48, DOI 10.1016/j.spacepol.2012.11.006.

Barrett, Anthony M., Seth D. Baum, and Kelly R. Hostetler, 2013. Analyzing and reducing the risks of inadvertent nuclear war between the United States and Russia. Science and Global Security, vol. 21, no. 2, pages 106-133, DOI 10.1080/08929882.2013.798984.

Baum, Seth D., Timothy M. Maher, Jr., and Jacob Haqq-Misra, 2013. Double catastrophe: Intermittent stratospheric geoengineering induced by societal collapse. Environment Systems and Decisions, vol. 33, no. 1 (March), pages 168-180, DOI 10.1007/s10669-012-9429-y.

Baum, Seth, 2012. Anticipating catastrophe. FutureChallenges, 26 September.
