GCRI is a nonprofit and nonpartisan think tank that analyzes the risk of events that could significantly harm or even destroy human civilization at the global scale.

Statusquotastrophe: Interviews with US Public Reveal Dark Trend

Catastrophic risk is generally understood to be the risk of some extreme negative deviation from the status quo. This GCRI commentary presents original research finding that many members of the US public have a very different view: the status quo is itself catastrophic. The finding is based on interviews with people from across the US.

The Importance of Statusquotastrophe—And Social Science Research

Recent research by Charlton and Marissa Yingling introduces the concept of statusquotastrophe, in which the status quo is perceived to be catastrophic. This GCRI commentary explains the importance of this concept and the social science research used to discover it. It suggests a major shift in how we think about catastrophic risk and contemporary society.

Recent Commentaries

One Big Question About Contrails and Climate Change

Contrails are the clouds formed by some airplane flights. They are made of water, which is a greenhouse gas, and they cause about 1-2% of climate change. Avoiding them may be a promising climate solution. This GCRI commentary asks: is progress on contrails avoidance limited more by the ability to avoid contrails or by the motivation to actually do so?

Government Procedure and Global Catastrophic Risk

Global catastrophic risk is heavily affected by the procedures governments use to make decisions. This GCRI commentary calls for a nuanced understanding instead of one-size-fits-all procedures. It discusses procedure changes across US history, from the New Deal through the anti-establishment backlash to contemporary debates about abundance.

Democratic Participation and Global Catastrophic Risk

US policy advocacy today mostly consists of insider engagement with government officials, in contrast with the public mobilization of earlier eras. This GCRI commentary argues that public mobilization, though difficult, would be more democratic and enable larger policy wins. It proposes a policy agenda designed for broad public appeal.

Recent Research

Climate Change, Uncertainty, and Global Catastrophic Risk

Is climate change a global catastrophic risk? This paper, published in the journal Futures, addresses the question by examining the definition of global catastrophic risk and by comparing climate change to another severe global risk, nuclear winter. The paper concludes that yes, climate change is a global catastrophic risk, and potentially a significant one.

Assessing the Risk of Takeover Catastrophe from Large Language Models

For over 50 years, experts have worried about the risk of AI taking over the world and killing everyone. The concern had always been about hypothetical future AI systems—until recent LLMs emerged. This paper, published in the journal Risk Analysis, assesses how close LLMs are to having the capabilities needed to cause takeover catastrophe.

On the Intrinsic Value of Diversity

Diversity is a major ethics concept, but it is remarkably understudied. This paper, published in the journal Inquiry, presents a foundational study of the ethics of diversity. It adapts ideas about biodiversity and sociodiversity to the overall category of diversity. It also presents three new thought experiments, with implications for AI ethics.

Manipulating Aggregate Societal Values to Bias AI Social Choice Ethics

AI ethics concepts like value alignment propose something similar to democracy, aggregating individual values into a social choice. This paper, published in the journal AI and Ethics, explores the potential for AI systems to be manipulated in ways analogous to sham elections in authoritarian regimes.

From the Archive

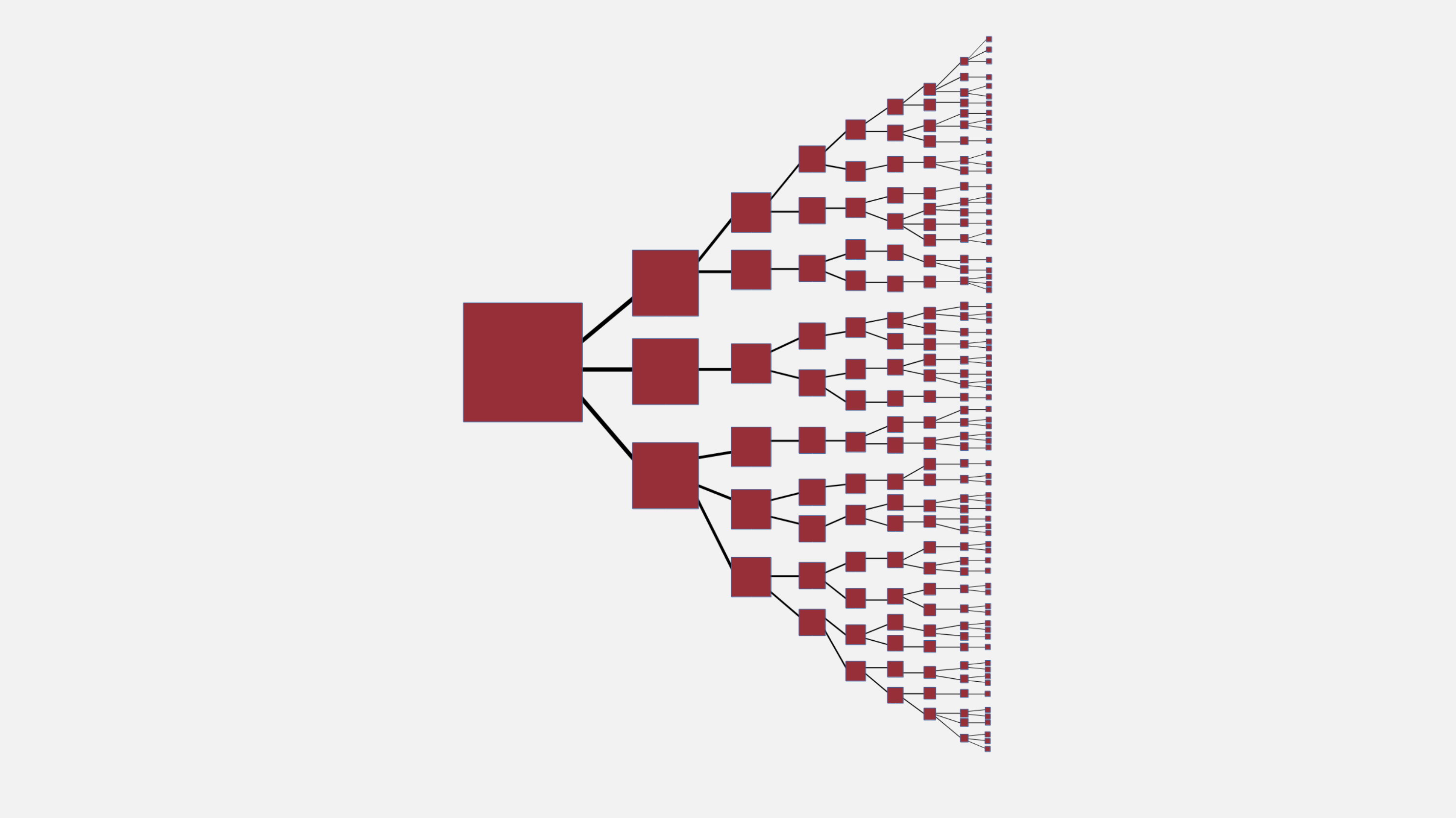

2020 Survey of Artificial General Intelligence Projects for Ethics, Risk, and Policy

An AI system with wide-ranging general intelligence could bring massive benefits or catastrophic harms, if it is built. This report surveys the global space of projects seeking to build artificial general intelligence. It finds 72 projects across 37 countries, with wide variation in parameters of relevance to AI governance.

Long-Term Trajectories of Human Civilization

What will be the fate of human civilization millions, billions, or trillions of years into the future? This paper, written by an international group of 14 scholars and published in the journal Foresight, studies several possible long-term trajectories of human civilization, including the radically good and catastrophically bad.

A Model for the Probability of Nuclear War

There has only been one nuclear war: World War II. That’s not enough data to calculate the probability. Instead, this GCRI report presents a probability model rooted in 14 nuclear war scenarios. It includes a dataset of 60 historical incidents, such as the Cuban missile crisis, that may have threatened to escalate into nuclear war.

The Far Future Argument for Confronting Catastrophic Threats to Humanity: Practical Significance and Alternatives

Global catastrophes could threaten humanity into the distant future, but many people don’t particularly care. This paper, published in the journal Futures, examines strategies for addressing global catastrophic risk that do not require concern about the far future. If the risks are addressed, it may not matter why people address them.

GCRI conducts interdisciplinary research across the global catastrophic risks, with emphasis on ten topics.

GCRI Topics

Concern about AI catastrophe has existed for many decades. It has recently become a lightning rod for debate alongside the growing societal prominence of AI technology. GCRI’s AI research helps to ground the discussion in the underlying character of the risk and develop solutions that help across a range of AI issues. GCRI is also active in AI ethics, providing perspective on the question of what values to build into AI systems.

Human civilization emerged during the Holocene, which for 10,000 years has brought relatively stable and favorable environmental conditions. Now, human activity is threatening those conditions, causing climate change, biodiversity loss, ecosystem destruction, and more. GCRI’s research assesses the global catastrophic risk posed by these environmental changes and advances practical solutions to reduce the risk.

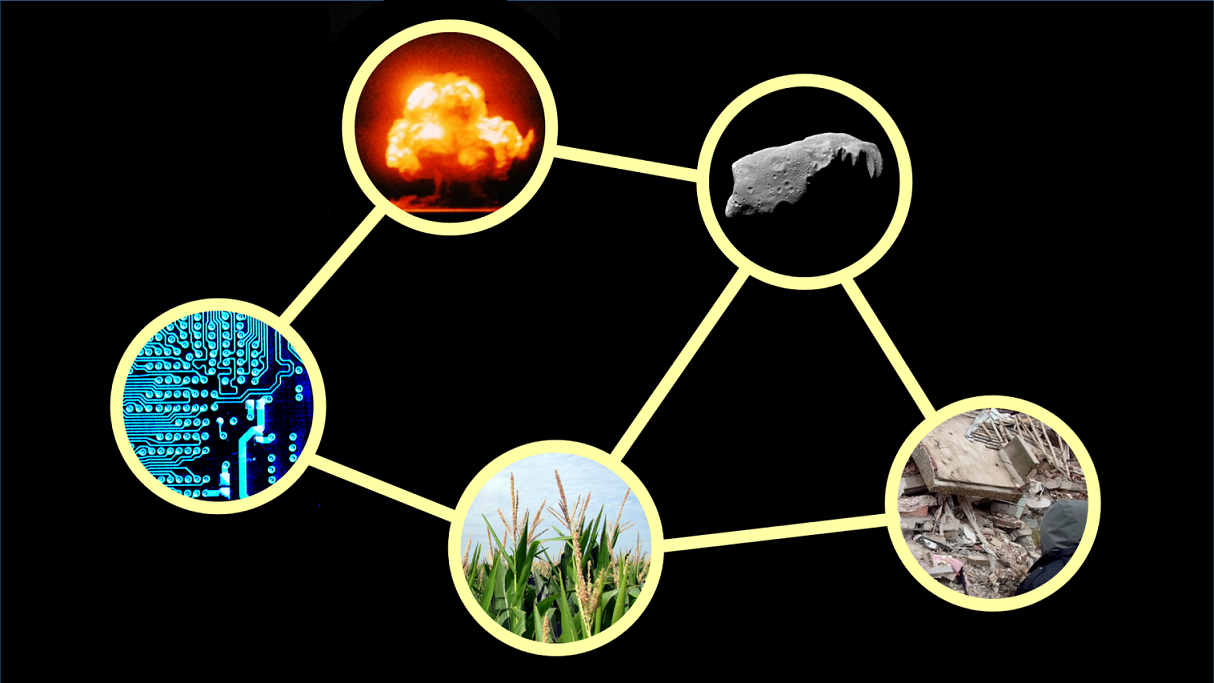

Instead of thinking in terms of specific global catastrophic risks in isolation from each other, it is often better to think holistically about the entirety of global catastrophic risk. This includes interconnections between the risks and actions that can affect multiple risks. GCRI was specifically founded as an organization dedicated to studying the whole of global catastrophic risk and this remains a priority for GCRI’s ongoing work.

Global catastrophic risk is clearly a major ethical issue, though there are many views on how much to prioritize the risk and what to do about it. GCRI's ethics research seeks to clarify the ethical foundations of global catastrophic risk and the relation between global catastrophic risk and other issues. This research aims to guide actions to address global catastrophic risk while accounting for related issues.

The world still possesses about 10,000 nuclear warheads, enough to cause massive global devastation. As long as adversarial relations between nuclear-armed countries persist, there will be some chance of escalation to nuclear war. To help address the risk, GCRI contributes risk analysis of the probability and severity of nuclear war and solutions for reducing the risk, especially for global effects such as nuclear winter.

Global catastrophic risk may be of astronomical significance: a global catastrophe could prevent Earth-originating civilization from expanding into the cosmos. Additionally, there are threats from outer space to life on Earth, such as asteroids and space weather. GCRI’s research relates global catastrophic risk to the fate of the universe and analyzes space-based risks in terms of their potential for global catastrophe.

Pandemics have caused many of the most severe events in human history, COVID-19 included. Modern biotechnology can help reduce the risk while also creating new risks. GCRI's research on pandemics and biorisk applies risk and decision analysis techniques and relates the risks to the broader domain of global catastrophic risk. GCRI has been less active on this topic, but it remains an important domain to address.

Global catastrophic risk is defined by its extreme severity. The severity has two major components: the resilience of human civilization to global catastrophes and, if civilization collapses, how successfully the survivors would recover. Both of these components have been alarmingly understudied. GCRI's research assesses the severity of global catastrophes and the opportunities to mitigate them.

Decision analysis can help to identify decision options and their consequences, including their effect on risks. Risk analysis can characterize potential harms and quantify them in terms of their probability and severity. GCRI applies risk and decision analysis methods to global catastrophic risk. GCRI's approach balances the value of quantitative analysis against the pitfalls of quantifying deeply uncertain parameters.

To successfully reduce global catastrophic risk, it is necessary to formulate solutions that would reduce the risk if implemented and that will actually be implemented. This often involves engaging with people and institutions that may not have global catastrophic risk as a priority. GCRI’s research develops solutions that account for the priorities of the relevant decision-makers to achieve significant reductions in the risk.

Founded in 2011, GCRI is one of the oldest active organizations working on global catastrophic risk. We provide intellectual and moral leadership to support the broader field of global catastrophic risk.

About GCRI

About

Read about GCRI’s mission, history, organization structure, finances, and more.

People

The GCRI team is led by senior experts in the field of global catastrophic risk.

Official Statements

GCRI publishes occasional statements about important current events, issues within the field, and organizational matters.

Press

GCRI welcomes inquiries from journalists and creators to advance public understanding of global catastrophic risk.

Join GCRI and many others around the world in helping to address humanity’s gravest threats.

How to Get Involved

Learn More

Continue reading the GCRI website to learn more about GCRI, about global catastrophic risk, and more.

Stay Informed

View GCRI publications and organization updates or subscribe to the GCRI newsletter.

Connect

GCRI’s annual Advising and Collaboration Program welcomes people interested in global catastrophic risk.

Donate

GCRI is nonprofit and relies on grants and donations to fund its activities. Visit our donate page to contribute.